What is a Service Mesh

In this tutorial, we discuss the basics of service meshes and popular service mesh solutions.

If you work in modern software design, you have probably heard of service mesh architectures, primarily in the context of microservices.

What is a Service Mesh?

A service mesh is a popular solution for managing communication between the individual microservices in a microservice application.

Why do we need a dedicated tool?

When we move from a monolithic application to a microservice application, we face a couple of new challenges that we did not have with the monolith.

Let's say we have an online shop application made up of several microservices: a web server that receives the user interface requests, a payment microservice that handles payments, a shopping cart microservice, a database microservice, and some other microservices.

Suppose we deploy this microservice application in a Kubernetes cluster. What does our Kubernetes setup need in order to run successfully, and what configuration does such an application require?

First of all, each microservice has its own business logic. For example, the payment service handles payments, the web server handles UI requests, the database persists data, and so on.

1. Communication

The services need to talk to each other. When a user puts an item in the shopping cart, the request is received by the web server, which forwards it to the payment microservice, which in turn talks to the database to persist the data.

So how do the services know how to talk to each other? What is the endpoint of each service? Every service endpoint that the web server talks to must be configured for the web server.

When we deploy a new microservice, we need to configure its endpoint in every microservice that needs to talk to it.

So that information becomes part of the application deployment code.
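
As a rough illustration of the problem described above, the sketch below models endpoint settings that are baked into each service's deployment. The service names, host names, ports, and the `callers_of` helper are all hypothetical and chosen only to mirror the online shop example.

```python
# Hypothetical endpoint settings baked into each service's deployment.
# Host names and ports are invented for illustration.
SERVICE_ENDPOINTS = {
    "web-server": {
        "payment": "payment:8080",
        "shopping-cart": "shopping-cart:8080",
    },
    "payment": {
        "database": "database:5432",
    },
    "shopping-cart": {
        "database": "database:5432",
    },
}


def callers_of(target, endpoints=SERVICE_ENDPOINTS):
    """Return every service whose configuration holds an endpoint for target."""
    return sorted(caller for caller, deps in endpoints.items() if target in deps)


# If the database endpoint changes, each of these services must be reconfigured.
print(callers_of("database"))
```

Every entry in this table is something a team has to keep in sync by hand whenever a service is added or moved.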

2. Security

Now what about security in our microservice application setup?

A common environment in many projects looks like this: we have firewall rules set up for the cluster, and maybe a proxy as the entry point that receives requests so that the cluster cannot be accessed directly.

So we have security around the cluster. However, once a request gets inside the cluster, the communication is insecure. Microservices talk to each other over HTTP or some other insecure protocol, and services talk to each other freely.

Every service inside the cluster can talk to any other service. There are no restrictions on that, and no additional security inside the cluster.

For smaller companies this may be acceptable. But for more sensitive applications, such as online banking, where a lot of personal data is present, security is very important, and the application must be as secure as possible.

Hence, additional configuration is needed inside each application to secure communication between the services running inside the cluster.

3. Retry logic

We also need retry logic in each microservice to make the whole application robust. If one microservice is not reachable, or the connection is lost, the caller needs to retry the connection.

So developers need to write this retry logic in each microservice.
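
The kind of retry logic each team would otherwise write by hand might look like the minimal sketch below. It assumes failures surface as `ConnectionError` and uses exponential backoff, which is one common choice and not something the scenario above prescribes. The function name `call_with_retry` and its parameters are hypothetical.

```python
import time


def call_with_retry(request, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call request() and retry on ConnectionError with exponential backoff.

    request is any zero-argument callable; sleep is injectable so the
    waiting behaviour can be replaced in tests.
    """
    for attempt in range(attempts):
        try:
            return request()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller see the failure
            sleep(base_delay * 2 ** attempt)  # wait longer after each failure
```

Without a service mesh, a helper like this has to be duplicated, or at least imported and configured, in every microservice.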

4. Metrics and Tracing

What about metrics for your services? You want to be able to monitor how your microservices are performing, which HTTP errors occur, and how many requests each microservice is receiving and sending.

So the development team may add monitoring logic to each microservice.
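
A minimal sketch of such in-application monitoring logic is shown below. It only counts requests and HTTP error responses in memory. The class `RequestMetrics` and its methods are hypothetical, and a real setup would export these numbers to a monitoring system.

```python
from collections import Counter


class RequestMetrics:
    """In-memory request and HTTP error counters for illustration only."""

    def __init__(self):
        self.requests = Counter()  # service name -> number of requests
        self.errors = Counter()    # (service name, status code) -> count

    def record(self, service, status_code):
        """Record one response; status codes of 400 and above count as errors."""
        self.requests[service] += 1
        if status_code >= 400:
            self.errors[(service, status_code)] += 1

    def error_rate(self, service):
        """Return the fraction of recorded requests for service that failed."""
        total = self.requests[service]
        if total == 0:
            return 0.0
        failed = sum(n for (svc, _), n in self.errors.items() if svc == service)
        return failed / total
```

Each service would need to call `record` around every request it handles, which is exactly the non-business code the article is concerned about.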

All of this non-business logic must be added to each application, possibly along with additional configuration in the cluster. These are all important challenges in a microservice application.

This means that developers of a microservice application spend time away from the actual service logic, and the services become more complex because of the added non-business logic, configuration, metrics, and so on.

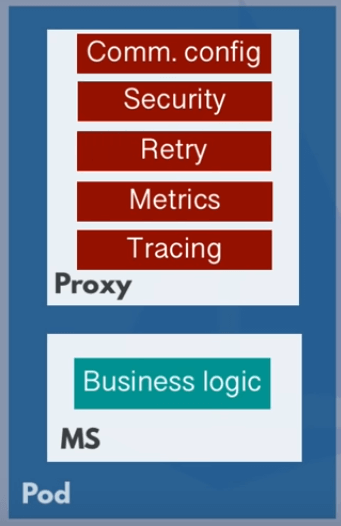

It makes more sense to extract all of this non-business logic out of the microservice and into its own small sidecar application that handles it and acts as a proxy.

The service mesh control plane automatically injects this sidecar proxy alongside every microservice.

The microservices now talk to each other through those proxies.

The service mesh provides microservice discovery, traffic management, load balancing, encryption, authentication, and authorization that are flexible, reliable, and fast.

The service mesh is usually implemented by providing a proxy instance, called a sidecar, for each service instance.

Sidecars handle inter-service communications, monitoring, security‑related concerns – anything that can be abstracted away from the individual services.
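
To make the sidecar idea more concrete, here is a toy sketch of what a sidecar takes over from the service. It is not how any real mesh proxy is implemented: `SidecarProxy`, `registry`, and the handler functions are hypothetical names, and network calls are replaced by plain function calls.

```python
class SidecarProxy:
    """Toy stand-in for a sidecar that wraps one service's outbound calls."""

    def __init__(self, service_name, registry, max_attempts=3):
        self.service_name = service_name
        self.registry = registry          # target service name -> handler callable
        self.max_attempts = max_attempts
        self.request_count = 0            # metrics collected by the proxy
        self.error_count = 0

    def call(self, target, payload):
        """Look up the target, send the payload, retry on connection errors."""
        handler = self.registry[target]   # discovery: the service needs no endpoint config
        for attempt in range(1, self.max_attempts + 1):
            self.request_count += 1
            try:
                return handler(payload)
            except ConnectionError:
                self.error_count += 1
                if attempt == self.max_attempts:
                    raise


def payment_service(payload):
    """Business logic only: no retries, metrics, or endpoint handling."""
    return {"status": "paid", "amount": payload["amount"]}


proxy = SidecarProxy("web-server", {"payment": payment_service})
print(proxy.call("payment", {"amount": 10}))
```

In this sketch, `payment_service` contains only business logic, while discovery, retries, and counters live in the proxy.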

So this way, developers can focus on development, support, and maintenance for the application code in the services; operations can maintain the service mesh and run the app.

With a Service Mesh, you can split the business logic of the application from observability and network and security policies.

The Service Mesh will enable you to connect, secure, and monitor your microservices.

1. Connect

A Service Mesh provides a way for services to discover and talk to each other. It allows for more effective routing to manage the flow of traffic and API calls between services/endpoints.
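
One routing capability of this kind is splitting traffic between versions of a service. The sketch below shows the idea with a simple weighted round-robin. The backend names `cart-v1` and `cart-v2`, the weights, and the function name are assumptions made for illustration, and real meshes express this through their own configuration rather than application code.

```python
import itertools


def weighted_round_robin(weights):
    """Return an endless iterator over backend names.

    Each backend appears in proportion to its integer weight.
    """
    pool = [name for name, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(pool)


# Send roughly three out of four requests to v1 and the rest to v2.
router = weighted_round_robin({"cart-v1": 3, "cart-v2": 1})
first_eight = [next(router) for _ in range(8)]
print(first_eight.count("cart-v1"), first_eight.count("cart-v2"))
```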

2. Secure

A Service Mesh offers you reliable communication between services. You can use a Service Mesh to enforce policies to allow or deny the connection. For example, you can configure a system to deny access to production services from a client service running in a development environment.
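
The development-to-production example above can be sketched as a small policy check. This is only an illustration of the allow or deny decision, assuming each workload is labelled with an environment. `Workload` and `is_allowed` are hypothetical names, not part of any mesh API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    environment: str  # for example "development" or "production"


def is_allowed(source, destination):
    """Deny calls into production from any workload outside production."""
    if destination.environment == "production" and source.environment != "production":
        return False
    return True


dev_client = Workload("test-client", "development")
prod_payment = Workload("payment", "production")
print(is_allowed(dev_client, prod_payment))
```

In a mesh, a rule like this would be enforced by the proxies, so the services themselves would not need to carry the check.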

3. Monitor

A Service Mesh gives you visibility into your microservices system. It can integrate out of the box with monitoring tools such as Prometheus and Jaeger.

How a Service Mesh and Kubernetes Work Together

If you are deploying only a base Kubernetes cluster without a Service Mesh, you will run into the following issues:

- There is no security between services.

- Tracing a service latency problem is a severe challenge.

- Load balancing is limited.

As you can see, a Service Mesh adds a layer that is currently missing from Kubernetes. In other words, a service mesh complements Kubernetes.

When it comes to service mesh adoption, Istio and Linkerd are more established. Yet many other options exist, including Consul Connect, Kuma, AWS App Mesh, and OpenShift Service Mesh. Below are the key features of nine service mesh offerings.