Network Namespaces

In this tutorial, we discuss network namespaces in Linux. Container runtimes such as Docker use network namespaces to implement network isolation.

We’ll start with a simple host. As we already know, containers are isolated from the underlying host using namespaces. So what are namespaces?

If your host were your house, namespaces would be the rooms within the house that you assign to each of your children. Each room gives a child privacy: each child can only see what’s within his or her own room and cannot see what happens outside it. As far as they’re concerned, they’re the only person living in the house.

However, as a parent, you have visibility into all the rooms in the house, as well as the other areas of the house. If you wish, you can establish connectivity between two rooms.

Process Namespace

When you create a container, you want to make sure that it is isolated: it must not see any other processes on the host or in any other container.

So we create a special room for it on our host using a namespace. As far as the container is concerned, it only sees the processes it runs and thinks that it is on its own host.

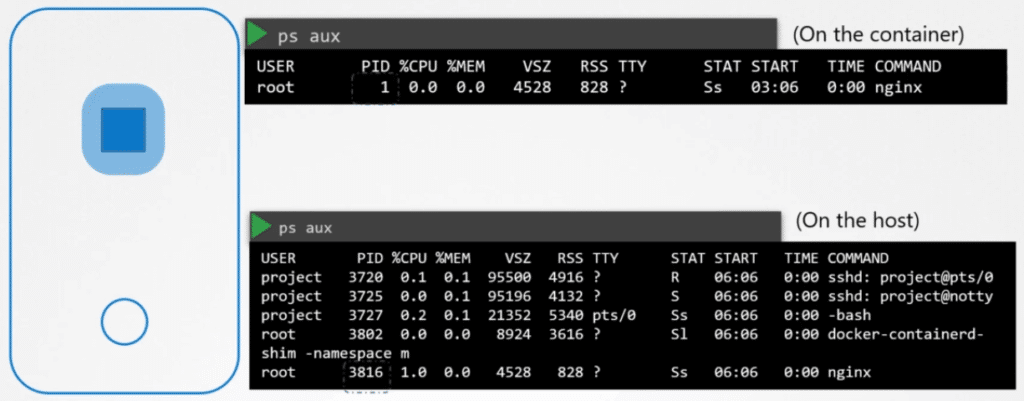

The underlying host, however, has visibility into all of the processes, including those running inside the containers. You can see this when you list the processes from within the container: you see a single process with process ID 1.

When you list the processes as the root user on the underlying host, you see all the other processes along with the process running inside the container, this time with a different process ID.

It’s the same process running with different process IDs inside and outside the container. That’s how namespaces work.
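To try this yourself, here is a minimal sketch, assuming a Linux host, root privileges and the unshare tool from util-linux. It starts ps as the first process of a new PID namespace, and it reads the namespace identifiers the kernel exposes under /proc/<pid>/ns/. The function names show_ns and pid_ns_demo and the DRY_RUN switch (print commands instead of running them) are illustrative, not part of any tool.

```shell
#!/bin/sh
# Sketch: observe PID namespace isolation on Linux.
# Assumes root privileges and util-linux's unshare.
# Set DRY_RUN=1 to print the privileged command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Print a process's namespace identifier, e.g. "pid:[4026531836]".
# Arguments: PID (default: self) and namespace type (default: pid).
show_ns() {
  readlink "/proc/${1:-self}/ns/${2:-pid}"
}

# Run ps as PID 1 of a fresh PID namespace. --fork makes ps a child of
# unshare (so it lands in the new namespace), and --mount-proc remounts
# /proc so ps only sees that namespace's processes.
pid_ns_demo() {
  run unshare --pid --fork --mount-proc ps -ef
}
```

Run as root, pid_ns_demo should list a single ps process with PID 1, mirroring what a container sees. Two processes share a namespace exactly when show_ns prints the same identifier for both.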

Network Namespaces

When it comes to networking, our host has its own interfaces that connect to the local area network (LAN). It also has its own routing and ARP tables with information about the rest of the network.

We want to hide all of those details from the container. When the container is created, we create a network namespace for it. That way, it has no visibility into any network-related information on the host. Within its namespace, the container can have its own virtual interfaces, routing table and ARP table.

Create Network Namespace

To create a new network namespace on a Linux host, run the following commands.

$ ip netns add red

$ ip netns add blue

In the above case, we created the network namespaces red and blue. To list the network namespaces, run the following command.

$ ip netns

red

blue

Exec in Network Namespace

To list the interfaces on my host, I run the ip link command. I see that my host has the loopback interface and the eth0 interface.

$ ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: mtu 1500 qdisc fq_codel state DOWN mode DEFAULT group default qlen 1000

link/ether 10:65:30:33:bc:96 brd ff:ff:ff:ff:ff:ff

Now how do we view the same information within the network namespaces we created? How do we run the same command within the red or blue namespace? Prefix the command with ip netns exec followed by the namespace name, which here is red. The ip link command is then executed inside the red namespace.

$ ip netns exec red ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

Another way to do it is to add the -n option to the original ip link command.

$ ip -n red link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

Both commands are equivalent. The second one is simpler, but remember that it only works when the command you want to run inside the namespace is the ip command itself.

As you can see, it only lists the loopback interface. You cannot see the host’s eth0 interface. So with namespaces, we have successfully prevented the container from seeing the host’s interfaces. The same is true of the ARP table.
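Because ip netns exec accepts any program, it is easy to wrap when you have several namespaces. A minimal sketch, assuming root privileges and existing namespaces: for_each_ns (a hypothetical helper) runs one command in each namespace and labels the output. It reads the names from ip netns list unless the NAMESPACES variable is set; awk keeps only the first field because that listing can append an id such as (id: 0). DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: run the same command inside several network namespaces.
# NAMESPACES overrides the list; otherwise it is read from `ip netns list`.
# Set DRY_RUN=1 to print the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

for_each_ns() {
  # `ip netns list` may print "red (id: 0)", so keep only the first field.
  for ns in ${NAMESPACES:-$(ip netns list | awk '{print $1}')}; do
    printf '== %s ==\n' "$ns"
    run ip netns exec "$ns" "$@"
  done
}
```

For example, for_each_ns ip link should show only the loopback interface for both red and blue at this point.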

ARP and Routing Table

If you run the arp command on the host, you see a list of entries. If you run it inside a network namespace, you see no entries. The same is true of the routing table.

On the host

$ arp

Address HWtype HWaddress Flags Mask Iface

172.17.0.21 ether 02:42:ac:11:00:15 C ens3

172.17.0.55 ether 02:42:ac:11:00:37 C ens3

In the network namespaces

$ ip netns exec red arp

Address HWtype HWaddress Flags Mask Iface

$ ip netns exec blue arp

Address HWtype HWaddress Flags Mask Iface

On the host

$ route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default _gateway 0.0.0.0 UG 600 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.18.0.0 0.0.0.0 255.255.0.0 U 0 0 0 eth0

In the network namespaces

$ ip netns exec red route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

$ ip netns exec blue route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

As of now, these network namespaces have no network connectivity. They have no interfaces of their own apart from loopback, and they cannot see the underlying host’s network.
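The arp and route commands come from the older net-tools package, which minimal distributions often do not install. The same comparison works with iproute2 alone: ip neigh shows the neighbor (ARP) table and ip route shows the routing table. A minimal sketch, assuming root privileges; compare_tables is a hypothetical helper, and DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: dump the ARP (neighbor) and routing tables of the host and of
# one network namespace, using iproute2 instead of net-tools.
# Set DRY_RUN=1 to print the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

compare_tables() {
  ns=${1:-red}
  echo "## host"
  run ip neigh show        # counterpart of `arp`
  run ip route show        # counterpart of `route`
  echo "## namespace $ns"
  run ip -n "$ns" neigh show
  run ip -n "$ns" route show
}
```

For a freshly created namespace, both namespace commands print nothing, matching the empty tables above.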

Virtual Cable

Let’s first look at establishing connectivity between the namespaces themselves. Just as we would connect two physical machines by running a cable between an Ethernet interface on each machine, we can connect two namespaces using a virtual Ethernet (veth) pair, or virtual cable.

It’s often referred to as a pipe, but I like to call it a virtual cable with an interface at each end. To create the cable, run the following command.

$ ip link add veth-red type veth peer name veth-blue

Attach interface

The next step is to attach each interface to the appropriate namespace. Use the following command to do that.

$ ip link set veth-red netns red

Similarly, attach the blue interface to the blue namespace.

$ ip link set veth-blue netns blue

We can then assign IP addresses to each of these namespaces.

We use the usual ip addr command to assign the IP addresses, but run it within each namespace. We assign the red namespace the IP 192.168.15.1 and the blue namespace the IP 192.168.15.2. Give both addresses a /24 prefix; otherwise each is added as a /32, and neither namespace has a route to the other’s address.

$ ip -n red addr add 192.168.15.1/24 dev veth-red

$ ip -n blue addr add 192.168.15.2/24 dev veth-blue

We then bring up the interfaces using the ip link set up command for each device within its namespace.

$ ip -n red link set veth-red up

$ ip -n blue link set veth-blue up

Now the links are up and the namespaces can reach each other. Try a ping from the red namespace to the IP of the blue namespace.

$ ip netns exec red ping 192.168.15.2

PING 192.168.15.2 (192.168.15.2) 56(84) bytes of data.

64 bytes from 192.168.15.2: icmp_seq=1 ttl=64 time=0.035 ms

64 bytes from 192.168.15.2: icmp_seq=2 ttl=64 time=0.046 ms

If you look at the ARP table in the red namespace, you see that it has identified its blue neighbor at 192.168.15.2, along with its MAC address. Similarly, if you list the ARP table in the blue namespace, you see that it has identified its red neighbor.

$ ip netns exec red arp

Address HWtype HWaddress Flags Mask Iface

192.168.15.2 ether da:a7:29:c4:5a:45 C veth-red

$ ip netns exec blue arp

Address HWtype HWaddress Flags Mask Iface

192.168.15.1 ether 92:d1:52:38:c8:bc C veth-blue

If you compare this with the ARP table of the host, you see that the host’s ARP table knows nothing about the new namespaces we created or about the interfaces we created in them.
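The steps of this section can be collected into one script. A minimal sketch, assuming root privileges and that none of these namespaces or interfaces exist yet; setup_direct_link is a hypothetical name, and DRY_RUN=1 prints each command instead of running it.

```shell
#!/bin/sh
# Sketch: connect namespaces "red" and "blue" with one veth pair.
# Set DRY_RUN=1 to print the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

setup_direct_link() {
  run ip netns add red
  run ip netns add blue
  # One virtual cable with an interface at each end.
  run ip link add veth-red type veth peer name veth-blue
  # Move each end into its namespace.
  run ip link set veth-red netns red
  run ip link set veth-blue netns blue
  # /24 installs a connected route to 192.168.15.0/24 in each namespace.
  run ip -n red addr add 192.168.15.1/24 dev veth-red
  run ip -n blue addr add 192.168.15.2/24 dev veth-blue
  run ip -n red link set veth-red up
  run ip -n blue link set veth-blue up
  # Should get replies once both ends are up.
  run ip netns exec red ping -c 2 192.168.15.2
}
```

To start over, ip netns del red and ip netns del blue remove both namespaces; once no process is still using a namespace, the veth end inside it is destroyed along with its peer.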

That worked when we had just two namespaces. What do you do when you have more of them? How do you enable all of them to communicate with each other? Just like in the physical world, you create a virtual network inside your host.

To create a network, you need a switch, so to create a virtual network, you need a virtual switch. We create a virtual switch within our host and connect the namespaces to it.

Create a virtual switch

How do you create a virtual switch within a host? There are multiple solutions available, such as the native Linux bridge and Open vSwitch. In this tutorial, we use the Linux bridge option.

Linux Bridge

To create an internal bridge network, we add a new interface to the host using the ip link add command with the type set to bridge. We will name it v-net-0.

$ ip link add v-net-0 type bridge

As far as our host is concerned, it is just another interface, just like the eth0 interface. It appears in the output of the ip link command along with the other interfaces. It is currently down, so you need to bring it up.

Use the ip link set dev up command to bring it up.

$ ip link set dev v-net-0 up

For the namespaces, this interface is like a switch that they can connect to. So think of it as an interface for the host and a switch for the namespaces. The next step is to connect the namespaces to this new virtual network switch.
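Creating the bridge can be made safe to re-run by first checking whether the interface already exists. A minimal sketch, assuming root privileges; create_bridge is a hypothetical helper that defaults to the name v-net-0, and DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: create a Linux bridge (if missing) and bring it up.
# Set DRY_RUN=1 to print the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

create_bridge() {
  br=${1:-v-net-0}
  # Skip creation when an interface with this name already exists.
  if [ "${DRY_RUN:-0}" = 1 ] || ! ip link show "$br" >/dev/null 2>&1; then
    run ip link add "$br" type bridge
  fi
  run ip link set dev "$br" up
}
```

Once namespaces are attached, ip link show master v-net-0 lists the interfaces connected to the bridge.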

Earlier, we created the cable, or veth pair, with the veth-red interface on one end and the veth-blue interface on the other, because we wanted to connect the two namespaces directly.

Now we will connect all the namespaces to the bridge network, so we need new cables for that purpose. The old cable no longer makes sense, so we will get rid of it.

Delete Cable

Use the ip link delete command to delete the cable.

$ ip -n red link del veth-red

When you delete one end of the link, the other end is deleted automatically, since they are a pair.
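You can confirm that the peer really disappears. A minimal sketch, assuming root privileges and the red and blue namespaces from the previous section; delete_direct_link and peer_removed are hypothetical helpers, and DRY_RUN=1 prints the delete command instead of running it.

```shell
#!/bin/sh
# Sketch: delete one end of the red/blue veth pair and check the other end.
# Set DRY_RUN=1 to print the delete command instead of executing it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

delete_direct_link() {
  run ip -n red link del veth-red
}

# Succeeds when veth-blue no longer exists inside the blue namespace.
peer_removed() {
  ! ip -n blue link show veth-blue >/dev/null 2>&1
}
```

As root, delete_direct_link && peer_removed && echo "peer gone" should print peer gone.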

Creating a virtual cable

Let us now create new cables to connect the namespaces to the bridge. Run the ip link add command and create a pair with veth-red on one end, like before, but this time name the other end veth-red-br, as it connects to the bridge network. This naming convention helps us easily identify the interfaces associated with the red namespace.

$ ip link add veth-red type veth peer name veth-red-br

Similarly, create a cable to connect the blue namespace to the bridge network.

$ ip link add veth-blue type veth peer name veth-blue-br

Now that we have the cables ready, it’s time to connect them to the namespaces.

Attach the cables to the namespaces and the bridge

To attach one end of this cable to the red namespace, run the ip link set veth-red netns red command. To attach the other end to the bridge network, run the ip link set command on the veth-red-br end and set its master to the v-net-0 bridge.

$ ip link set veth-red netns red

$ ip link set veth-red-br master v-net-0

Follow the same procedure to attach the blue cable to the blue namespace and the bridge network.

$ ip link set veth-blue netns blue

$ ip link set veth-blue-br master v-net-0

Add an IP address

Let us now set IP addresses for these links and turn them up. We will use the same IP addresses that we used before, 192.168.15.1 and 192.168.15.2.

$ ip -n red addr add 192.168.15.1/24 dev veth-red

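$ ip -n blue addr add 192.168.15.2/24 dev veth-blue

Finally, bring up the devices. A veth pair only passes traffic when both of its ends are up, so bring up the bridge-side ends on the host as well.

$ ip -n red link set veth-red up

$ ip -n blue link set veth-blue up

$ ip link set veth-red-br up

$ ip link set veth-blue-br up

The namespaces can now reach each other over the network. We follow the same procedure to connect the remaining two namespaces to the same network.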
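Repeating these steps for every namespace is mechanical, so they fit in one function. A minimal sketch, assuming root privileges, an existing v-net-0 bridge, and a namespace that already exists (create it first with ip netns add). connect_ns is a hypothetical helper that follows the veth-NAME / veth-NAME-br naming convention above, and BRIDGE and DRY_RUN are illustrative variables. Because Linux limits interface names to 15 characters, the function rejects namespace names longer than 7 characters.

```shell
#!/bin/sh
# Sketch: attach an existing network namespace to the bridge.
# Usage: connect_ns NAMESPACE ADDRESS/PREFIX
# Set DRY_RUN=1 to print the commands instead of executing them.

BRIDGE=${BRIDGE:-v-net-0}

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

connect_ns() {
  ns=$1
  addr=$2
  # "veth-" + name + "-br" must fit in the 15-character interface name limit.
  if [ "${#ns}" -gt 7 ]; then
    echo "namespace name too long for interface naming: $ns" >&2
    return 1
  fi
  run ip link add "veth-$ns" type veth peer name "veth-$ns-br"
  run ip link set "veth-$ns" netns "$ns"
  run ip link set "veth-$ns-br" master "$BRIDGE"
  run ip -n "$ns" addr add "$addr" dev "veth-$ns"
  run ip -n "$ns" link set "veth-$ns" up
  # A veth pair only carries traffic when both ends are up.
  run ip link set "veth-$ns-br" up
}
```

For the two remaining namespaces (the names ns3 and ns4 are placeholders), that would be connect_ns ns3 192.168.15.3/24 and connect_ns ns4 192.168.15.4/24.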

We now have all four namespaces connected to our internal bridge network, and they can all communicate with each other. They have the IP addresses 192.168.15.1, 192.168.15.2, 192.168.15.3 and 192.168.15.4. And remember, we assigned our host the IP 192.168.1.2.

What if I try to reach one of the interfaces in these namespaces from my host? Would it work? No. My host is on one network and the namespaces are on another.

$ ping 192.168.15.1

Not reachable

Establish connectivity between host and namespaces

What if I really want to establish connectivity between my host and these namespaces? Remember, we said that the bridge switch is actually a network interface for the host. So we do have an interface on the 192.168.15 network on our host.

Since it’s just another interface, all we need to do is assign an IP address to it so that we can reach the namespaces through it. Run the ip addr command to assign the IP 192.168.15.5 to this interface.

$ ip addr add 192.168.15.5/24 dev v-net-0

We can now ping the red namespace from our local host.
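Giving the bridge an address also makes the kernel add a connected route to 192.168.15.0/24 through v-net-0 on the host, which is what lets the host reach the namespaces (ip route show dev v-net-0 displays it). A minimal sketch, assuming root privileges and the four namespace addresses above; host_to_namespaces and HOST_ADDR are hypothetical names, and DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: give the host an address on the bridge, then probe each namespace.
# Set DRY_RUN=1 to print the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

host_to_namespaces() {
  run ip addr add "${HOST_ADDR:-192.168.15.5/24}" dev v-net-0
  for target in 192.168.15.1 192.168.15.2 192.168.15.3 192.168.15.4; do
    # One probe per namespace, waiting at most 1 second for a reply.
    run ping -c 1 -W 1 "$target"
  done
}
```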

Remember that this entire network is still private and restricted to the host. From within the namespaces, you can’t reach the outside world, nor can anyone from the outside world reach the services or applications hosted inside. The only door to the outside world is the Ethernet port on the host.

Bridge to reach the LAN network

So how do we configure this bridge to reach the LAN network through the Ethernet port? Say there is another host attached to our LAN network with the address 192.168.1.3.

How can I reach this host from within my namespaces? What happens if I try to ping this host from my blue namespace? The blue namespace sees that I am trying to reach the 192.168.1 network, which is different from its own network, 192.168.15.

So it looks at its routing table to see how to find that network. The routing table has no information about other networks, so it reports that the network is unreachable. We need to add an entry to the routing table to provide a gateway, or door, to the outside world.
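The first decision the namespace makes, whether the destination is on its own network, is arithmetic on the address and the prefix length: mask both addresses and compare. A minimal sketch in POSIX shell, assuming IPv4 addresses in CIDR notation; same_subnet and ip_to_int are hypothetical helper names, and real routing goes on to a longest-prefix match over the whole routing table, which this does not model.

```shell
#!/bin/sh
# Sketch: the "is DEST on my own network?" test, in POSIX shell arithmetic.
# Only models the comparison against a single local ADDRESS/PREFIX.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split "a.b.c.d" into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# same_subnet LOCAL_ADDR/PREFIX DEST
# Exit status 0 if DEST lies in the same network as LOCAL_ADDR.
same_subnet() {
  addr=${1%/*}
  len=${1#*/}
  # Prefix length -> netmask, e.g. 24 -> 0xFFFFFF00.
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  a=$(ip_to_int "$addr")
  d=$(ip_to_int "$2")
  [ $(( a & mask )) -eq $(( d & mask )) ]
}
```

same_subnet 192.168.15.2/24 192.168.1.3 fails, which is why blue needs a route. The same check shows why only 192.168.15.5 can serve as blue’s gateway: same_subnet 192.168.15.2/24 192.168.15.5 succeeds, while same_subnet 192.168.15.2/24 192.168.1.2 fails.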

So how do we find that gateway? A door or gateway, as we discussed before, is a system on the local network that connects to the other network. So which system has one interface on the network local to the blue namespace, the 192.168.15 network, and is also connected to the outside LAN network?

Here’s a logical view: it’s the local host that runs all these namespaces. Remember, our local host has an interface attached to the private network, which is why we can ping the namespaces from it. So our local host is the gateway that connects the two networks together.

Add a route entry

We can now add a route entry in the blue namespace that says: route all traffic to the 192.168.1.0/24 network through the gateway at 192.168.15.5.

$ ip netns exec blue ip route add 192.168.1.0/24 via 192.168.15.5

Now remember, our host has two IP addresses: one on the bridge network at 192.168.15.5 and another on the external network at 192.168.1.2.

Can you use either one in the route? No, because the blue namespace can only reach a gateway on its local network, which is 192.168.15.5. A gateway must be reachable from your namespace when you add it to a route. When you try to ping now, you no longer get the Network unreachable message.

$ ip netns exec blue ping 192.168.1.3

PING 192.168.1.3 (192.168.1.3) 56(84) bytes of data.

But you still don’t get any response back from the ping. What might be the problem? We talked about a similar situation in one of our earlier tutorials, where we tried to reach the external internet from our home network through our router.

Our home network uses private IP addresses that the destination network doesn’t know about, so replies cannot find their way back. For this, we need NAT enabled on the host acting as the gateway here, so that it can send the messages to the LAN in its own name, with its own address.

Add NAT functionality to our host

So how do we add NAT functionality to our host? We do that using iptables. Add a new rule to the POSTROUTING chain of the nat table to masquerade, that is, to replace the source address of all packets coming from the source network 192.168.15.0/24 with the host’s own IP address.

$ iptables -t nat -A POSTROUTING -s 192.168.15.0/24 -j MASQUERADE

That way, anyone outside the network who receives these packets will think that they are coming from the host and not from within the namespaces.
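The MASQUERADE rule is only half of the gateway job. The host must also forward packets between v-net-0 and eth0, and the kernel default for net.ipv4.ip_forward is 0 (disabled), although tools such as Docker often turn it on. A minimal sketch, assuming root privileges; enable_nat is a hypothetical helper, and DRY_RUN=1 prints the commands instead of running them. It does not touch the FORWARD chain, so on hosts whose firewall drops forwarded traffic by default (Docker sets that chain's policy to DROP, for example), extra FORWARD rules would be needed.

```shell
#!/bin/sh
# Sketch: turn the host into a NAT gateway for the bridge network.
# Set DRY_RUN=1 to print the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

enable_nat() {
  subnet=${1:-192.168.15.0/24}
  # Let the kernel route packets between interfaces (v-net-0 <-> eth0).
  # Takes effect immediately but does not persist across reboots.
  run sysctl -w net.ipv4.ip_forward=1
  # Rewrite the source address of packets from the private subnet to the
  # address of the interface they leave through.
  run iptables -t nat -A POSTROUTING -s "$subnet" -j MASQUERADE
}
```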

When we try to ping now, we see that we are able to reach the outside world. Finally, say the LAN is connected to the internet, and we want the namespaces to reach the internet too.

So we try to ping a server on the internet at 8.8.8.8 from the blue namespace. We receive a familiar message: Network is unreachable. By now we know why. We look at the routing table and see that we have a route to the 192.168.1 network, but not to anything else.

Since these namespaces can reach any network our host can reach, we can simply say: to reach any external network, talk to our host. So we add a default gateway pointing to our host.

$ ip netns exec blue ip route add default via 192.168.15.5

We should now be able to reach the outside world from within these namespaces.
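With more than one namespace, the default route has to be added in each of them. A minimal sketch, assuming root privileges and the gateway 192.168.15.5 used above; set_default_routes and GATEWAY are hypothetical names, and DRY_RUN=1 prints the commands instead of running them. It uses ip route replace, which adds the route or updates an existing one, so it can be re-run safely. The more specific 192.168.1.0/24 route added earlier can stay, since the longest matching prefix wins.

```shell
#!/bin/sh
# Sketch: point the default route of each given namespace at the host.
# Usage: set_default_routes NAMESPACE...
# Set DRY_RUN=1 to print the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

set_default_routes() {
  gw=${GATEWAY:-192.168.15.5}
  for ns in "$@"; do
    # "replace" creates the route, or updates it if one already exists.
    run ip -n "$ns" route replace default via "$gw"
  done
}
```

For example, set_default_routes red blue followed by ip netns exec blue ping -c 3 8.8.8.8.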

Adding a port forwarding rule with iptables

Now, what about connectivity from the outside world to inside the namespaces? Say, for example, the blue namespace hosts a web application on port 80.

As of now, the namespaces are on an internal private network, and no one in the outside world knows about it. You can only access them from the host itself.

If you try to ping the private IP of the namespace from another host on another network, you will see that it’s not reachable, because that host doesn’t know about this private network.

To make that communication possible, you have two options, the same two that we saw in the previous tutorial on NAT.

The first is to give away the identity of the private network to the second host. So we basically add a route entry to the second host telling that host that the network 192.168.15 can be reached through the host at 192.168.1.2. But we don’t want to do that.

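The other option is to add a port forwarding rule using iptables, saying that any TCP traffic arriving at port 80 on the host is forwarded to port 80 on the IP assigned to the blue namespace. Note that --dport needs a protocol match such as -p tcp, and that --to-destination is an option of the DNAT target, so it must come after -j DNAT.

$ iptables -t nat -A PREROUTING -p tcp --dport 80 -j DNAT --to-destination 192.168.15.2:80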
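Running the same iptables -A command twice appends a duplicate rule. A minimal sketch, assuming root privileges and the blue namespace at 192.168.15.2; forward_port and add_nat_rule are hypothetical helpers that use iptables -C to check whether the rule already exists before appending it, and DRY_RUN=1 prints the rule instead of installing it. Keep in mind that PREROUTING only sees packets arriving on a network interface, so connections opened from the host itself are not translated by this rule.

```shell
#!/bin/sh
# Sketch: forward TCP traffic arriving on the host to the blue namespace.
# Assumes root privileges and iptables with the -C (check) command.
# Set DRY_RUN=1 to print the rule instead of installing it.

# add_nat_rule CHAIN RULE-SPEC...: append to the nat table unless present.
add_nat_rule() {
  chain=$1
  shift
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf 'iptables -t nat -A %s %s\n' "$chain" "$*"
  elif ! iptables -t nat -C "$chain" "$@" 2>/dev/null; then
    iptables -t nat -A "$chain" "$@"
  fi
}

# forward_port [PORT] [DEST_IP]: DNAT host PORT to DEST_IP:PORT.
forward_port() {
  port=${1:-80}
  dest=${2:-192.168.15.2}
  # -p tcp is required for --dport; --to-destination belongs to -j DNAT.
  add_nat_rule PREROUTING -p tcp --dport "$port" -j DNAT --to-destination "$dest:$port"
}
```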