Kubernetes Architecture

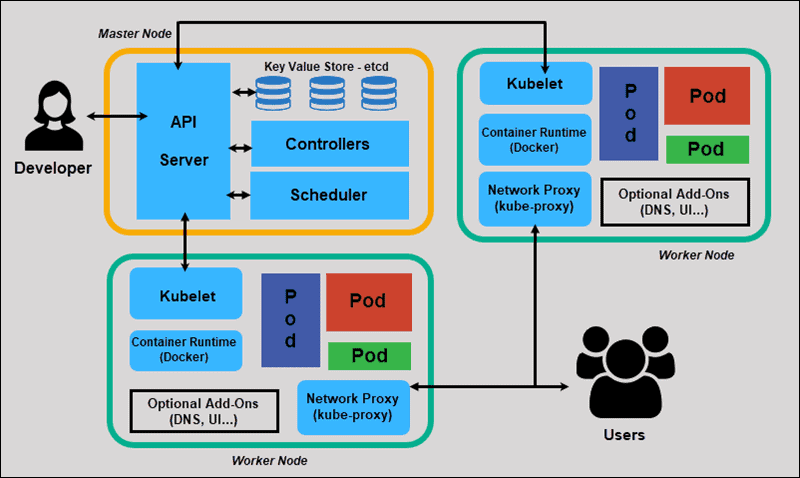

In this tutorial, we will discuss Kubernetes architecture. Kubernetes follows a master-slave architecture: a cluster has one or more master nodes and a set of worker nodes.

There are some basic concepts and terms related to Kubernetes (K8s) that are important to understand. This tutorial describes the essential components of Kubernetes that will provide you with the required knowledge to get started.

The purpose of a Kubernetes cluster is to host your applications in the form of containers in an automated fashion, so that you can quickly deploy as many instances of your application as you need and easily enable communication between the services in your application.

Many components work together to make this possible.

Kubernetes cluster architecture has the following main components.

- Master nodes

- Worker/Slave nodes

Master Node

The master node is responsible for managing the Kubernetes cluster: storing information about the different nodes, planning which container goes where, monitoring the nodes and the containers on them, and so on.

It is the main entry point for all administrative tasks. There can be more than one master node in the cluster to provide fault tolerance. Running more than one master node puts the system in High Availability mode, in which one of them acts as the main node on which all the tasks are performed.

To manage the cluster state, the master nodes use a key-value store known as etcd, to which all the master nodes connect.

Let us discuss the components of a master node.

- ETCD Cluster

- Kube Scheduler

- Kube API Server

- Controller Manager

- Cloud Controller Manager

1. ETCD

ETCD is a database that stores information in a key-value format. It is mainly used for shared configuration and service discovery.
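To make the key-value idea concrete, here is a minimal in-memory sketch. It is not the etcd API: the class `ToyKeyValueStore`, its methods, and the example keys are hypothetical and only illustrate storing cluster information under hierarchical keys and reading it back by prefix.

```python
class ToyKeyValueStore:
    """In-memory stand-in for a key-value store (illustrative, not etcd)."""

    def __init__(self):
        self._data = {}      # key -> (value, revision of last write)
        self._revision = 0   # incremented on every write

    def put(self, key, value):
        """Store a value and return the revision assigned to this write."""
        self._revision += 1
        self._data[key] = (value, self._revision)
        return self._revision

    def get(self, key):
        """Return the stored value, or None if the key does not exist."""
        entry = self._data.get(key)
        return None if entry is None else entry[0]

    def list_prefix(self, prefix):
        """Return all key/value pairs whose key starts with the prefix."""
        return {
            key: value
            for key, (value, _) in sorted(self._data.items())
            if key.startswith(prefix)
        }


store = ToyKeyValueStore()
# Hypothetical keys chosen for illustration only.
store.put("/registry/nodes/worker-1", "Ready")
store.put("/registry/nodes/worker-2", "NotReady")
last_revision = store.put("/registry/pods/default/web-0", "Running")
nodes = store.list_prefix("/registry/nodes/")
```

Listing by prefix is what lets a component ask for "all nodes" or "all pods" without knowing each key in advance.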

2. Kube Scheduler

As the name suggests, the scheduler schedules the work onto the different worker nodes. It has the resource usage information for each worker node. The scheduler also considers quality of service requirements, data locality, and many other such parameters. It then assigns the work, in the form of pods, to suitable nodes.
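The scheduling decision described above can be sketched as two steps: filter out nodes that cannot fit the pod, then score the rest. This is a simplified illustration, not the real kube-scheduler algorithm; the `Node` and `PodRequest` types and the "most free resources wins" scoring rule are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod, nodes):
    """Return the feasible node with the most spare capacity, or None."""
    # Filter step: keep only nodes with enough free CPU and memory.
    feasible = [
        node for node in nodes
        if node.free_cpu_millicores >= pod.cpu_millicores
        and node.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None
    # Score step (assumed rule): prefer the node left with the most CPU,
    # breaking ties by remaining memory.
    return max(
        feasible,
        key=lambda node: (
            node.free_cpu_millicores - pod.cpu_millicores,
            node.free_memory_mib - pod.memory_mib,
        ),
    )


nodes = [
    Node("worker-1", free_cpu_millicores=500, free_memory_mib=1024),
    Node("worker-2", free_cpu_millicores=2000, free_memory_mib=4096),
    Node("worker-3", free_cpu_millicores=4000, free_memory_mib=512),
]
chosen = pick_node(PodRequest("web", cpu_millicores=1000, memory_mib=1024), nodes)
unschedulable = pick_node(PodRequest("huge", cpu_millicores=8000, memory_mib=1024), nodes)
```

Here `worker-1` lacks CPU and `worker-3` lacks memory, so only `worker-2` survives the filter step. A pod that fits nowhere gets no node at all.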

3. Kube API Server

The Kube API Server is the primary management component of Kubernetes. It is responsible for orchestrating all operations within the cluster. It exposes the Kubernetes API, which external users use to perform management operations on the cluster and which the various controllers use to monitor the state of the cluster and make changes as required.

4. Controller Manager

The controller manager runs the following controller processes. Logically, each controller is a separate process, but they are all compiled into a single binary and run in a single process to reduce complexity.

These controllers include the following:

- Node Controller: The node controller takes care of nodes. It is responsible for onboarding new nodes into the cluster and for handling situations where nodes become unavailable or get destroyed.

- Replication Controller: The replication controller ensures that the desired number of pods is running at all times in the replication group.

- Endpoints Controller: Populates the Endpoints object (that is, joins Services & Pods).

- Service Account & Token Controllers: Create default accounts and API access tokens for new namespaces.
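All of these controllers follow the same pattern: compare the desired state with the observed state and act on the difference. The sketch below illustrates that pattern for a replication-style controller. The function `reconcile` and the action tuples it returns are hypothetical and are not part of any Kubernetes API.

```python
def reconcile(desired_replicas, running_pods):
    """Return the actions needed to move the observed state to the desired state."""
    difference = desired_replicas - len(running_pods)
    if difference > 0:
        # Too few pods: ask for the missing ones to be created.
        return [("create", f"pod-new-{i}") for i in range(difference)]
    if difference < 0:
        # Too many pods: remove the surplus (chosen alphabetically here).
        surplus = -difference
        return [("delete", name) for name in sorted(running_pods)[:surplus]]
    # Observed state already matches the desired state.
    return []


scale_up = reconcile(3, ["web-a"])
scale_down = reconcile(1, ["web-b", "web-a", "web-c"])
steady = reconcile(2, ["web-a", "web-b"])
```

A real controller runs this comparison continuously, so a pod that crashes is simply seen as a shortfall on the next pass and gets replaced.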

5. Cloud Controller Manager

The cloud controller manager runs controllers that interact with the underlying cloud providers. The binary was introduced as an alpha feature in Kubernetes release 1.6 and runs cloud-provider-specific controller loops only. When you run it, you must disable these controller loops in the kube-controller-manager.

As with the kube-controller-manager, the cloud-controller-manager combines several logically independent control loops into a single binary that you run as a single process.

The following controllers can have cloud provider dependencies:

- Node controller: For checking the cloud provider to determine if a node has been deleted in the cloud after it stops responding.

- Route controller: For setting up routes in the underlying cloud infrastructure.

- Service controller: For creating, updating and deleting cloud provider load balancers.

Worker/Slave nodes

A worker node is a virtual or physical server that runs the applications and is controlled by the master node. The pods are scheduled on the worker nodes, which have the necessary tools to run and connect them.

To access the applications from the outside world, you connect to the worker nodes, not the master nodes.

Let us discuss the components of a worker/slave node.

1. Container Runtime

The container runtime is the software that is responsible for running containers. Kubernetes supports several container runtimes: Docker, containerd, CRI-O, rktlet, and any implementation of the Kubernetes CRI (Container Runtime Interface).

So Docker, or a supported equivalent, must be installed on all the nodes in the cluster, including the master.

2. Kubelet

Kubelet is an agent that runs on each worker node and communicates with the master node. For example, if you have 5 worker nodes, a kubelet runs on each of the 5.

It listens for instructions from the Kube API Server and deploys or destroys containers on its node. The kubelet periodically sends status reports to the Kube API Server so that the state of the node and its containers can be monitored.
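The status reporting described above can be pictured as heartbeats: each node's latest report carries a timestamp, and a node whose last report is too old is no longer treated as healthy. The following is a simplified sketch. The function name, the condition strings, and the 40-second grace period are assumptions chosen for illustration, not values read from a real cluster.

```python
def node_conditions(last_heartbeat, now, grace_seconds):
    """Map each node name to 'Ready' or 'Unknown' based on heartbeat age."""
    conditions = {}
    for name, reported_at in last_heartbeat.items():
        age = now - reported_at
        # A node that has not reported within the grace period is not trusted.
        conditions[name] = "Ready" if age <= grace_seconds else "Unknown"
    return conditions


# Timestamps are plain seconds on an arbitrary clock (hypothetical data).
last_heartbeat = {"worker-1": 95.0, "worker-2": 40.0}
conditions = node_conditions(last_heartbeat, now=100.0, grace_seconds=40.0)
```

In this example `worker-1` reported 5 seconds ago and stays healthy, while `worker-2` has been silent for 60 seconds and is flagged, which is the kind of signal the node controller acts on.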

3. Kube-proxy

Kube-proxy is a proxy service that runs on each node and helps make services available to external hosts. It forwards requests to the correct containers and is capable of performing primitive load balancing.

Kube-proxy maintains network rules on nodes. These network rules allow network communication to your Pods from network sessions inside or outside of your cluster.

Kube-proxy uses the operating system packet filtering layer if there is one and it’s available. Otherwise, Kube-proxy forwards the traffic itself.
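The "primitive load balancing" idea can be sketched as spreading requests for one service across the pods that back it. The example below uses round-robin purely for illustration. The class `ToyServiceProxy` and the endpoint addresses are hypothetical, and the real kube-proxy modes may select backends differently.

```python
import itertools


class ToyServiceProxy:
    """Hands out backend endpoints for a service in round-robin order."""

    def __init__(self, endpoints):
        if not endpoints:
            raise ValueError("a service needs at least one endpoint")
        # cycle() repeats the endpoint list forever, in order.
        self._cycle = itertools.cycle(list(endpoints))

    def route(self):
        """Return the endpoint that should receive the next request."""
        return next(self._cycle)


# Hypothetical pod addresses backing a single service.
proxy = ToyServiceProxy(["10.0.1.4:8080", "10.0.2.7:8080"])
targets = [proxy.route() for _ in range(4)]
```

The client only ever talks to the service. Which pod actually answers is decided on the node, which is why pods can come and go without clients noticing.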

4. Pods

Pods are one of the crucial concepts in Kubernetes, as they are the key construct that developers interact with.

A pod is a group of one or more containers that logically go together. Pods run on nodes, and the containers in a pod run together as a logical unit with a shared context. They all share the same IP address and port space, can reach each other via localhost, and can share storage.
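As an illustration of containers grouped into one pod, here is a sketch of a two-container pod manifest built as a Python dictionary and serialized to JSON. The pod name, container names, images, command, and mount paths are made up for the example. The field names follow the core `v1` Pod schema.

```python
import json

# Hypothetical pod: a web server plus a helper that writes its content.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar"},
    "spec": {
        # One volume, mounted into both containers, gives them shared storage.
        "volumes": [{"name": "shared-data", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx",
                "volumeMounts": [
                    {"name": "shared-data", "mountPath": "/usr/share/nginx/html"}
                ],
            },
            {
                "name": "content-writer",
                "image": "busybox",
                "command": ["sh", "-c", "echo hello > /data/index.html && sleep 3600"],
                "volumeMounts": [{"name": "shared-data", "mountPath": "/data"}],
            },
        ],
    },
}

manifest_json = json.dumps(pod_manifest, indent=2)
container_names = [c["name"] for c in pod_manifest["spec"]["containers"]]
```

Both containers are scheduled onto the same node as one unit. Because they share the pod's network namespace, the helper could also reach the web server at `localhost:80`.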