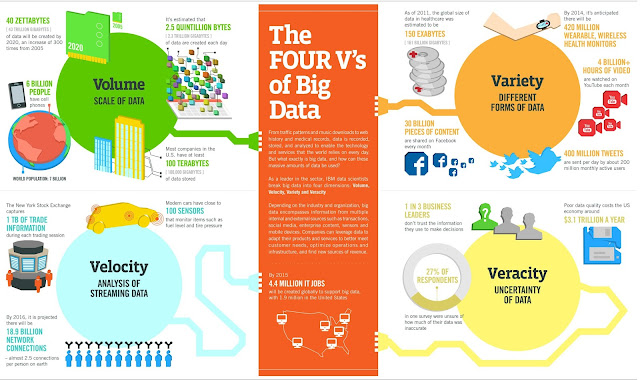

Four V’s of Big Data

Big Data is commonly characterized in terms of four V’s:

- Volume

- Velocity

- Variety

- Veracity

1. Volume

Volume refers to the amount (size) of data. Today, data sizes have grown to terabytes and beyond, in the form of records or transactions. It has been estimated that 90% of all data ever created was created in the past two years, that the amount of data in the world will double every two years, and that by 2020 we would have 50 times as much data as we had in 2011.

In the past, the creation of so much data would have caused serious problems. Nowadays, with decreasing storage costs, better storage solutions such as Hadoop, and algorithms that extract meaning from all that data, it is far less of a problem.

Massive volumes of data are being generated, ranging from terabytes to zettabytes. This data comes from machines, networks, devices, sensors and satellites (including geospatial data), and from human interaction with systems: transaction-based data (accumulated over years), text, images, videos from social media, etc.
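
To make the range from terabytes to zettabytes concrete, here is a small Python helper that formats byte counts in decimal (SI) units, where each step is a factor of 1,000. It is only a sketch: the function name `human_readable` is hypothetical, and binary units (1,024-based, e.g. TiB) would give slightly different numbers.

```python
# Decimal (SI) storage units: each step up is a factor of 1000 (assumption: not binary 1024).
UNITS = ["B", "KB", "MB", "GB", "TB", "PB", "EB", "ZB"]

TB = 1000 ** 4  # bytes in one terabyte
ZB = 1000 ** 7  # bytes in one zettabyte


def human_readable(num_bytes: float) -> str:
    """Format a byte count with the largest unit that keeps the value >= 1."""
    value = float(num_bytes)
    unit_index = 0
    # Divide by 1000 until the value is small enough or we run out of units.
    while value >= 1000 and unit_index < len(UNITS) - 1:
        value /= 1000
        unit_index += 1
    return f"{value:.1f} {UNITS[unit_index]}"


print(human_readable(3 * TB))  # 3.0 TB
print(human_readable(ZB))      # 1.0 ZB
print(ZB // TB)                # 1000000000: one zettabyte is a billion terabytes
```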

Use-Cases

- A commercial aircraft generates 3 GB of flight sensor data per hour.

- An ERP system for a mid-size company grows by 1-2 TB annually.

- A typical telecom operator generates 3 TB of call detail records (CDRs) every day.

- Turn the 12 terabytes of tweets created each day into improved product sentiment analysis, to understand customers’ views and improve the business.
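
The daily rates in these use cases add up quickly. A back-of-the-envelope Python sketch, assuming decimal units and a constant daily rate over a 365-day year; the 10-hour flight length is an illustrative assumption, not part of the figures above.

```python
GB = 1000 ** 3  # decimal gigabyte, in bytes
TB = 1000 ** 4  # decimal terabyte, in bytes
PB = 1000 ** 5  # decimal petabyte, in bytes


def yearly_bytes(bytes_per_day: float, days: int = 365) -> float:
    """Total bytes accumulated over `days` at a constant daily rate."""
    return bytes_per_day * days


# Telecom CDRs: 3 TB per day
cdr_year = yearly_bytes(3 * TB)
print(f"CDRs per year: {cdr_year / PB:.3f} PB")      # 1.095 PB

# Tweets: 12 TB per day
tweets_year = yearly_bytes(12 * TB)
print(f"Tweets per year: {tweets_year / PB:.2f} PB")  # 4.38 PB

# Aircraft: 3 GB per flight hour; the 10-hour flight is an assumption
flight_hours = 10
flight_bytes = 3 * GB * flight_hours
print(f"One {flight_hours}-hour flight: {flight_bytes / GB:.0f} GB")  # 30 GB
```

In other words, a single operator's call records alone pass the petabyte mark within a year.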

2. Velocity

Velocity is the speed at which data is created, stored, analyzed and visualized. In the past, when batch processing was common practice, it was normal to receive an update from the database every night or even every week, because computers and servers needed substantial time to process the data and update the databases. In the big data era, data is created in real time or near real time. With the availability of Internet-connected devices, wireless or wired, machines and devices can pass on their data the moment it is created. Think about how many SMS messages pass through a single telecom carrier, or how many Facebook status updates or credit card swipes occur, every minute of every day, and you’ll have a good appreciation of velocity.
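
The contrast between nightly batch updates and real-time processing can be sketched in a few lines of Python. This is a toy illustration with made-up card-swipe amounts, not a real stream-processing framework: the batch function produces one answer after all the data is in, while the generator yields an updated answer after every record.

```python
from typing import Iterable, Iterator


def batch_total(transactions: list[float]) -> float:
    """Batch style: wait until all of the day's data is collected, then process it once."""
    return sum(transactions)


def streaming_totals(transactions: Iterable[float]) -> Iterator[float]:
    """Streaming style: update the result the moment each record arrives."""
    running = 0.0
    for amount in transactions:
        running += amount
        yield running  # an up-to-date answer is available after every event


swipes = [12.5, 40.0, 7.25, 100.0]  # hypothetical card-swipe amounts

print(batch_total(swipes))             # 159.75, available only at the end
print(list(streaming_totals(swipes)))  # [12.5, 52.5, 59.75, 159.75]
```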

The speed at which data is created today is almost unimaginable: every minute, 100 hours of video are uploaded to YouTube. In addition, every minute over 200 million emails are sent, around 20 million photos are viewed and 30,000 uploaded on Flickr, almost 300,000 tweets are sent, and 4 to 5 million Google searches are performed.
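
Per-minute figures are easier to appreciate as per-second rates and daily totals. A quick Python conversion using the approximate numbers quoted above, assuming the rates stay constant over a whole day:

```python
SECONDS_PER_MINUTE = 60
MINUTES_PER_DAY = 24 * 60


def rates(count_per_minute: int) -> tuple[float, int]:
    """Convert a per-minute count into (per-second rate, per-day total)."""
    return count_per_minute / SECONDS_PER_MINUTE, count_per_minute * MINUTES_PER_DAY


# Approximate per-minute figures quoted in the text
per_minute = {
    "emails sent": 200_000_000,
    "tweets sent": 300_000,
    "Flickr photos uploaded": 30_000,
}

for activity, count in per_minute.items():
    per_second, per_day = rates(count)
    print(f"{activity}: ~{per_second:,.0f} per second, ~{per_day:,} per day")
```

For example, 300,000 tweets per minute is 5,000 tweets every second, or 432 million per day; 200 million emails per minute is roughly 3.3 million per second.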

According to Gartner, velocity means both how fast the data is being produced and how fast the data must be processed to meet demand.
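
The second half of that definition, how fast data must be processed, matters because a pipeline that is slower than its input falls further behind every second. A minimal sketch under simplifying assumptions (constant arrival and processing rates, an empty queue at the start, no bursts); the function name and the rates are hypothetical.

```python
def backlog_after(seconds: int, arrival_rate: float, processing_rate: float) -> float:
    """Unprocessed events after `seconds`, assuming constant rates and an empty queue at start."""
    # If processing keeps up, nothing accumulates; otherwise the gap piles up linearly.
    return max(0.0, float((arrival_rate - processing_rate) * seconds))


# Hypothetical pipeline: 5,000 events/s arrive, but only 4,000/s can be processed.
print(backlog_after(3600, 5000, 4000))  # 3600000.0 events behind after one hour
print(backlog_after(3600, 5000, 6000))  # 0.0: processing keeps up
```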

The flow of data is massive and continuous, and this real-time data can help businesses make decisions in real time.

Use-Cases

When we search on Google for travel, shopping (electronics, apparel, shoes, watches, etc.), jobs, etc., it shows us relevant advertisements in real time while we browse.

3. Variety

Variety refers to the many sources and types of data. In the past, most of the data that was created was structured data that fitted neatly into rows and columns, but those days are over. Nowadays, an estimated 90% of the data generated by organizations is unstructured. Data today comes in many different formats: structured data, semi-structured data, unstructured data and even complex structured data.

i. Structured Data

Any data that can be stored, accessed and processed in a fixed format is termed structured data. Structured data has a high level of organization, such as information in a relational database.

E.g. Relational data
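
As a small illustration of structured data, the Python sketch below uses the standard-library `sqlite3` module to store records in a table with a fixed schema. The table and the sample rows are hypothetical.

```python
import sqlite3

# A fixed schema: every row has the same columns with the same types.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, city TEXT)"
)
conn.executemany(
    "INSERT INTO customers (id, name, city) VALUES (?, ?, ?)",
    [(1, "Asha", "Pune"), (2, "Ravi", "Delhi"), (3, "Meera", "Pune")],
)

# Because the structure is known in advance, querying is straightforward.
rows = conn.execute(
    "SELECT name FROM customers WHERE city = ? ORDER BY id", ("Pune",)
).fetchall()
print(rows)  # [('Asha',), ('Meera',)]
conn.close()
```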

ii. Semi-structured Data

Semi-structured data is information that doesn’t reside in a relational database but does have some organizational properties that make it easier to analyze. With some processing, it can be stored in a relational database. Examples: XML and JSON documents are semi-structured, and data in NoSQL databases is often considered semi-structured.
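
The processing needed to load semi-structured data into a relational database usually means mapping each document onto a fixed set of columns. A Python sketch with hypothetical JSON records; the choice of columns is an assumption, and fields that don’t fit (here, `tags`) are simply dropped.

```python
import json
import sqlite3

# Semi-structured: records share some keys, but fields can be missing or nested.
raw = """[
  {"id": 1, "name": "Asha", "contact": {"email": "asha@example.com"}},
  {"id": 2, "name": "Ravi", "tags": ["vip"]}
]"""


def flatten(record: dict) -> tuple:
    """Map a JSON record onto fixed columns (id, name, email), using None for missing fields."""
    contact = record.get("contact", {})
    return (record["id"], record.get("name"), contact.get("email"))


records = json.loads(raw)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
conn.executemany("INSERT INTO people VALUES (?, ?, ?)", [flatten(r) for r in records])

result = conn.execute("SELECT * FROM people ORDER BY id").fetchall()
print(result)  # [(1, 'Asha', 'asha@example.com'), (2, 'Ravi', None)]
conn.close()
```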

iii. Unstructured Data

Any data with unknown form or unknown structure is classified as unstructured data. It often includes text and multimedia content. Examples include e-mail messages, word processing documents, videos, photos, audio files, presentations, webpages and many other kinds of business documents.
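
Because unstructured data has no predefined fields, any structure has to be extracted from it before analysis. A minimal Python sketch that pulls e-mail addresses out of a hypothetical message body with a regular expression; the pattern is deliberately simple and does not cover every valid address format.

```python
import re

# Unstructured: free text with no predefined fields.
email_body = """Hi team, the Q3 report is attached.
Please send feedback to priya@example.com or to ops@example.org by Friday.
Thanks, Priya"""

# Simplified pattern (assumption: not RFC-complete): local part, "@", domain with a dot.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")

# findall turns free text into a structured list of values.
emails = EMAIL_RE.findall(email_body)
print(emails)  # ['priya@example.com', 'ops@example.org']
```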

4. Veracity

- Big data veracity refers to the biases, noise, abnormalities, ambiguities and latency in data.

- Is the data that is being stored and mined meaningful to the problem being analyzed?

- Keep your data clean, and put processes in place to keep ‘dirty data’ from accumulating in your systems.

Having a lot of data in different volumes coming in at high speed is worthless if that data is incorrect. Incorrect data can cause a lot of problems for organizations as well as for consumers. Therefore, organizations need to ensure that both the data and the analyses performed on it are correct, especially in automated decision-making, where no human is involved anymore.
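
Keeping ‘dirty data’ out usually starts with simple, explicit rules. A Python sketch that drops duplicate, missing and out-of-range sensor readings; the field names (`sensor`, `ts`, `temp_c`) and the -50 to 60 °C plausibility range are assumptions chosen for illustration.

```python
def clean(readings: list[dict]) -> list[dict]:
    """Drop duplicate, missing and implausible readings, keeping the first valid copy."""
    seen = set()
    cleaned = []
    for r in readings:
        key = (r.get("sensor"), r.get("ts"))
        value = r.get("temp_c")
        if key in seen:              # duplicate delivery of the same reading
            continue
        if value is None:            # missing measurement
            continue
        if not -50 <= value <= 60:   # assumed plausible range for this sensor
            continue
        seen.add(key)
        cleaned.append(r)
    return cleaned


readings = [
    {"sensor": "s1", "ts": 1, "temp_c": 21.5},
    {"sensor": "s1", "ts": 1, "temp_c": 21.5},   # duplicate
    {"sensor": "s2", "ts": 1, "temp_c": None},   # missing value
    {"sensor": "s3", "ts": 1, "temp_c": 999.0},  # sensor glitch
    {"sensor": "s2", "ts": 2, "temp_c": 19.0},
]
print(len(clean(readings)))  # 2
```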

Two other important V’s of Big Data

1. Validity

Validity refers to whether the data is correct and accurate for its intended use in decision-making.

2. Volatility

Big data volatility refers to how long data remains valid and how long it should be stored. In this world of real-time data, you need to determine at what point the data is no longer relevant to the current analysis.
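
One common way to act on volatility is a retention window: records older than a chosen age are excluded from the current analysis (and possibly archived or deleted). A Python sketch; the 30-day window, the fixed "now" and the event layout are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def still_relevant(events: list[dict], now: datetime, retention: timedelta) -> list[dict]:
    """Keep only events whose timestamp falls within the retention window."""
    return [e for e in events if now - e["ts"] <= retention]


now = datetime(2024, 1, 31, tzinfo=timezone.utc)  # fixed "now" so the example is repeatable
events = [
    {"id": 1, "ts": datetime(2024, 1, 30, tzinfo=timezone.utc)},  # 1 day old
    {"id": 2, "ts": datetime(2023, 12, 1, tzinfo=timezone.utc)},  # 61 days old
]

kept = still_relevant(events, now, timedelta(days=30))  # assumed 30-day window
print([e["id"] for e in kept])  # [1]
```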

Conventional Approaches

Storage

- RDBMS (Oracle, DB2, MySQL, etc.)

- OS File-System

Processing

- SQL Queries

- Custom frameworks

Problems with Traditional/Conventional Approaches

- Limited storage capacity

- Limited processing capacity

- Limited scalability

- Single point of failure

- Sequential processing

- RDBMS can handle only structured data

- Data requires pre-processing
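
The sequential-processing and scalability problems are what distributed frameworks such as Hadoop address by splitting data into partitions, processing each partition independently, and merging the partial results (the MapReduce pattern). The Python sketch below shows only that split/map/merge structure on hypothetical call detail records; it runs sequentially in one process and does not use Hadoop’s API. In a real cluster, each partition would be handled by a different machine.

```python
from collections import Counter
from functools import reduce

# Hypothetical call detail records: (caller, call minutes)
cdrs = [("A", 3), ("B", 5), ("A", 2), ("C", 1), ("B", 4), ("A", 6)]


def map_partition(records: list[tuple[str, int]]) -> Counter:
    """Map step: total call minutes per caller within one partition."""
    totals = Counter()
    for caller, minutes in records:
        totals[caller] += minutes
    return totals


def merge(a: Counter, b: Counter) -> Counter:
    """Reduce step: combine partial results from two partitions."""
    merged = Counter(a)
    merged.update(b)  # update() adds counts and, unlike `+`, keeps zero totals
    return merged


# Split the data into partitions of two records each.
partitions = [cdrs[i:i + 2] for i in range(0, len(cdrs), 2)]
partials = [map_partition(p) for p in partitions]  # each could run on a separate node
total = reduce(merge, partials, Counter())
print(dict(sorted(total.items())))  # {'A': 11, 'B': 9, 'C': 1}
```

Because each partition is processed without looking at the others, adding machines adds processing capacity, and the merge step only has to combine small summaries rather than the raw data.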